We have created a new GHC blog at http://hackage.haskell.org/trac/ghc/blog. The new blog is part of our Trac and therefore should have lower overhead for posting, so hopefully we’ll use it more. I’ll leave this blog up though, it is probably more appropriate for longer or not-entirely-ghc-specific articles.

Parallel programming in Haskell with explicit futures

Recently we released a new version of the parallel package on Hackage, version 3.1.0.0. This synchronises the API to that described in our Haskell Symposium 2010 paper, “Seq no More: Better Strategies for Parallel Haskell“. If you don’t know what strategies are, I recommend the paper: it does have plenty of introductory material, as well as explaining the new improvements we made.

In this post I don’t want to focus on strategies, though. What has been bugging me about strategies is that, while the abstraction has some nice properties (compositionality, modularity), it does that at the expense of transparency. The problem is that it can be quite hard to reason about performance when using strategies, because you have to think quite carefully about when things are evaluated. In order to use a strategy, the input to the strategy has to be a data structure with some unevaluated components, that the strategy can exploit to create parallelism. Using laziness in this way is the key trick that allows strategies to be modular – indeed it’s an instance of the modularity insight from John Hughes’ famous “Why Functional Programming Matters” – but it can render the resulting program somewhat opaque to understanding the parallel execution.

What I plan to do in this post is introduce a simpler, more explicit, but less abstract, parallelism API that is implemented in a few lines on top of primitives provided by the parallel package. The new interface is still deterministic and pure: your parallel programs produce the same answer every time guaranteed, they don’t deadlock, and they don’t suffer from race conditions.

Let’s start by looking at one of the nice additions in the new strategies API, the Eval monad:

data Eval a = Done a instance Monad Eval where return x = Done x Done x >>= k = k x runEval :: Eval a -> a runEval (Done x) = x

I’ve included the implementation too, so you can see how simple it is. The Eval monad is just a “strict identity monad” – it doesn’t actually do anything in the usual sense of monads (there’s no state, error handling, or reading/writing), but what it does do is order things. When you write

do

x <- m

f x

you are guaranteed that m happens before f is called. This is all very well, but what does it mean for m to “happen”? You can’t do anything useful in this monad exept pass values around. The strategies library adds two useful primitives to the monad:

rseq :: a -> Eval a rpar :: a -> Eval a

these are what you need to describe parallel execution: rseq forces its argument to be evaluated, and rpar begins evaluation of its argument in parallel. The whole purpose of the Eval monad is to let you express an ordering between uses of rseq and rpar, so you can make them happen in the order you want. This is quite nice: we can be explicit about ordering by staying within the monad, and monads are nice and compositional. To illustrate this, when we introduce parallel Haskell we often start with an example like this:

let

a = primes !! 999

b = nfib 45

in

a `par` b `pseq` a + b

this is written using the “old” par and pseq operators, to say that a should be evaluated in parallel with b, and finally the result a + b should be returned. We can rewrite this using the Eval monad:

let

a = primes !! 999

b = nfib 45

in

runEval $ do

a' <- rpar a

b' <- rseq b

return (a' + b')

Ok, it’s longer, but it expresses more clearly that we intend to do the following things in order:

- start evaluation of

ain parallel, - evaluate

b - return the result

and with monads being compositional, we can build up larger strictly-ordered computations by composing smaller parts. This was difficult with par/pseq alone, because the ordering was not explicit.

So the Eval monad takes us in a profitable direction: it allows you to be more explicit about parallel execution, and thereby enable parallel programming to be a bit less hit-and-miss, at the expense of making the programmer write more code. But as it stands, the Eval monad doesn’t go quite far enough, for two reasons:

- while it’s clear where the fork point for

ais, it’s not so clear where the join point is, that is the point in the ordered computation where the value ofais eventually demanded. You can userseqto express a join point, but in the interests of being more explicit it would be better if the API forced you to write a join point and give an ordering between the joins for each parallel task. - the API doesn’t seem quite right for expressing nested parallel tasks: the argument to rpar is just a polymorphic value, so if we want to use the

Evalmonad in the parallel task we need to write anotherrunEval(or use thedotoperator of the strategies API, which essentially embedsrunEval).

So the API I’m going to propose that addresses these two issues is this:

fork :: Eval a -> Eval (Future a) join :: Future a -> Eval a

You might be familiar with this API: it’s a common parallelism abstraction, used in other languages such as Manticore and similar to what you can do in Cilk. The fork operation creates a parallel task, and join requests the result of a previously forked task. A forked task may or may not actually be evaluated in parallel – it depends on how many CPUs are actually available at runtime – but if not, then the evaluation is performed by join. Either way, the result of join is the same: since the Eval monad may not perform side effects, the API is deterministic.

It’s trival to implement this API on top of what we already have. Here you go:

data Future a = Future a fork :: Eval a -> Eval (Future a) fork a = do a' <- rpar (runEval a); return (Future a') join :: Future a -> Eval a join (Future a) = a `pseq` return a

We also need the rseq and rdeepseq operators from strategies; the point of the Eval monad is that we can say when we want things to be evaluated (in parallel).

I’ve written some examples using this API, and it works rather nicely. Here’s a snippet of an implementation of a parallel Black-Scholes implementation, that I modified from the version in the Haskell CnC distribution:

blackscholes :: Int -> Int -> Eval Float

blackscholes numOptions granularity =

do

fs <- forM [0, granularity .. numOptions-1] $ \t ->

fork (return (executeStep t granularity))

foldM (\ acc f ->

do x <- join f

return (acc + (x ! 0)))

0 fs

The first part, beginning forM, forks parallel tasks to evaluate each step of the computation, and the second part beginning foldM joins each parallel task, and combines the results. This implementation scales well, achieving a 7.5 speedup on the 8-core machine I’m using here, with the latest GHC HEAD (the full code is here).

The fork/join API I’ve described here is comparable in expressivity to Haskell CnC, indeed many of the Haskell CnC examples translate without too much difficulty and give similar performance. The main difference in the programming models is the join: in Haskell CnC you don’t have to thread the result of the fork to the join point, instead they both share an “Item collection” which is written by the parallel task, and read from to join.

However, this isn’t a replacement for CnC for (at least) two reasons:

- Haskell CnC is based its own scheduling abstraction which allows more flexibility in choosing a good scheduler for the application. In Haskell CnC you have more control over the runtime scheduling, and there is more scope to experiment with different schedulers. The fork/join API on the other hand is built directly on top of

par, and hence is tied to the GHC runtime’s scheduler for sparks. - fork/join is limited by the size of the spark pool, which by default is 4096, whereas Haskell CnC is not limited in this way. The limitation doesn’t affect the result, only the number of outstanding simultaneous parallel tasks. If more parallel tasks are spawned, earlier ones will be discaded.

I think where fork/join loses in flexibility and abstraction, it gains in simplicity and accessibility. If you’re learning parallelism in Haskell it might well be a good place to start.

Here’s the API in full:

module Future (Eval(..), Future, runEval, fork, join, rseq, rdeepseq) where import Control.DeepSeq import Control.Parallel import Control.Parallel.Strategies data Future a = Future a fork :: Eval a -> Eval (Future a) fork a = do a' <- rpar (runEval a); return (Future a') join :: Future a -> Eval a join (Future a) = a `pseq` return a

Yielding more improvements in parallel performance

GHC’s parallel GC makes heavy use of hand-written spinlocks. These are basically mutexes like those provided by the Unix pthreads API or equivalently Windows CrticicalSections, except that they have no support for blocking the thread and waking up, they just spin until the lock is acquired. I did it this way for a few reasons:

- I sometimes need to acquire a lock on one thread and release in on another. This is not supported by traditional mutexes.

- We expect all threads to be running and contentions to be short.

- I like to know exactly what code is running.

Unfortunately when using all the cores on the machine, it is common that one or more of our threads gets descheduled, and assuption (2) no longer holds. When this happens, one or more of the threads can be spinning waiting for the descheduled thread, and no progress is made (the CPU just gets warmer) until the OS decides to reschedule it, which might be a whole time slice.

This is something we knew about (see ticket #3553), but didn’t have a good solution for, and was recently encountered in another context here. It has been called the “last core parallel slowdown” and seems to affect Linux more than other OSs, presumably due to the way the Linux scheduler works. In my experience the effect is far more dramatic when using the 8th core of an 8-core box than when using the second core of a dual-core.

This problem was present in GHC 6.10, but is exacerbated in GHC 6.12 because we now do minor GCs in parallel, which can mean hundreds of all-core synchronisations per second. The reason we do minor GCs in parallel is for locality; the results in our ICFP’09 paper clearly show the performance benefits here.

Using traditional mutexes instead of our hand-rolled spinlocks would help, but it’s not as simple as just swapping out our spinlocks for mutexes: as noted above, sometimes we acquire one of these locks on

one thread and release it on another, and pthreads doesn’t allow that. It might be possible to restructure things such that this doesn’t happen, but I haven’t found a good way yet. An alternative is to use condition variables, but this lead to a severe reduction in performance when I tried it (see ticket #3553).

So as an experiment I tried adding an occasional call to ‘yield’ (sched_yield on Unix, SwitchToThread on Windows) inside the code that acquires a spinlock, with a tunable number of spins between each call to yield (I’m using 1000). I also added a ‘yield’ in the GC’s wait loop, so that threads with no work to do during parallel GC will repeatedly yield between searching for work.

To my surprise, this change helped not only the “last core” case, but also parallel performance across the board. I imagine one reason for this is that the yields help reduce contention on the memory bus,

particularly in the parallel GC.

Here are the results, measureing the benchmark programs used in our ICFP’09 paper. First, using all 8 cores of an 8-core, running 64-bit programs on Fedora 9, comparing GHC before and after the patch:

---------------------------

Program Elapsed

---------------------------

gray -40.3%

mandel -41.4%

matmult -12.7%

parfib -3.3%

partree -4.9%

prsa -14.6%

ray -10.9%

sumeuler -1.8%

---------------------------

Geometric Mean -17.7%

Now, using only 7 cores of the 8-core:

--------------------------

Program Elapsed

--------------------------

gray -15.6%

mandel -18.8%

matmult -5.5%

parfib +2.9%

partree -5.0%

prsa -9.3%

ray +1.7%

sumeuler -0.9%

--------------------------

Geometric Mean -6.6%

we even see a benefit when not using all the cores.

Here’s the difference between 7 and 8 cores, both with the new patch:

--------------------------

Program Elapsed

--------------------------

gray +39.1%

mandel -8.0%

matmult -4.1%

parfib -10.0%

partree -1.3%

prsa -15.3%

ray +37.3%

sumeuler -11.4%

--------------------------

Geometric Mean +1.5%

So the “last core” problem affects only two of our benchmarks (ray and gray), whereas the others all now improve when adding the last core.

This nicely illustrates the problem of extrapolating from a single benchmark – programs vary greatly in their behaviour, in my experience it’s impossible to find a single program that is “representative”. Using larger programs usually doesn’t help: often large programs tend to have small inner-loops. This group of 8 programs is quite meager, and expanding it is something I’d like to do (send me your parallel programs!).

Here’s the comparison on a dual-core, using 32-bit programs on Ubuntu Karmic, comparing GHC before and after the patch:

-------------------------

Program Elapsed

-------------------------

gray -17.2%

mandel -13.4%

matmult -6.7%

parfib +0.4%

partree -1.5%

prsa -1.0%

ray +1.6%

sumeuler -8.7%

-------------------------

Geometric Mean -6.0%

And since this is such a trivial patch, we can merge it into 6.12.2, which should hopefully be in the next Haskell Platform release.

This is really an interim solution, since the real solution is not to do “stop-the-world” GC at all, at least for minor collections. That’s something we’re also working on (hopefully for GHC 6.14), but in the meantime this patch gives some nice improvements for the 6.12 line, and shows that you can actually push a stop-the-world design quite a long way.

Parallelism /= Concurrency

If you want to make programs go faster on parallel hardware, then you need some kind of concurrency. Right?

In this article I’d like to explain why the above statement is false, and why we should be very clear about the distinction between concurrency and parallelism. I should stress that these ideas are not mine, and are by no means new, but I think it’s important that this issue is well understood if we’re to find a way to enable everyday programmers to use multicore CPUs. I was moved to write about this after reading Tim Bray’s articles on Concur.next: while I agree with a lot of what’s said there, particularly statements like

Exposing real pre-emptive threading with shared mutable data structures to application programmers is wrong

it seems that parallelism and concurrency are still being conflated. Yes we need concurrency in our languages, but if all we want to do is make programs run faster on a multicore, concurrency should be a last resort.

First, I’ll try to establish the terminology.

A concurrent program is one with multiple threads of control. Each thread of control has effects on the world, and those threads are interleaved in some arbitrary way by the scheduler. We say that a concurrent programming language is non-deterministic, because the total effect of the program may depend on the particular interleaving at runtime. The programmer has the tricky task of controlling this non-determinism using synchronisation, to make sure that the program ends up doing what it was supposed to do regardless of the scheduling order. And that’s no mean feat, because there’s no reasonable way to test that you have covered all the cases. This is regardless of what synchronisation technology you’re using: yes, STM is better than locks, and message passing has its advantages, but all of these are just ways to communicate between threads in a non-deterministic language.

A parallel program, on the other hand, is one that merely runs on multiple processors, with the goal of hopefully running faster than it would on a single CPU.

So where did this dangerous assumption that Parallelism == Concurrency come from? It’s a natural consequence of languages with side-effects: when your language has side-effects everywhere, then any time you try to do more than one thing at a time you essentially have non-determinism caused by the interleaving of the effects from each operation. So in side-effecty languages, the only way to get parallelism is concurrency; it’s therefore not surprising that we often see the two conflated.

However, in a side-effect-free language, you are free to run different parts of the program at the same time without observing any difference in the result. This is one reason that our salvation lies in programming languages with controlled side-effects. The way forward for those side-effecty languages is to start being more explicit about the effects, so that the effect-free parts can be identified and exploited.

It pains me to see Haskell’s concurrency compared against the concurrency support in other languages, when the goal is simply to make use of multicore CPUs (Edit: Ted followed up with a clarification). It’s missing the point: yes of course Haskell has the best concurrency support :-), but for this problem domain it has something even better: deterministic parallelism. In Haskell you can use multicore CPUs without getting your hands dirty with concurrency and non-determinism, without having to get the synchronisation right, and with a guarantee that the parallel program gives the same answer every time, just more quickly.

There are two facets to Haskell’s determinstic parallelism support:

- par/pseq and Strategies. These give you a way to add parallelism to an existing program, usually without requiring much restructuring. For instance, there’s a parallel version of ‘map’. Support for this kind of parallelism is maturing with the soon to be released GHC 6.12.1, where we made some significant performance improvements over previous versions.

- Nested Data Parallelism. This is for taking advantage of parallelism in algorithms that are best expressed by composing operations on (possibly nested) arrays. The compiler takes care of flattening the array structure, fusing array operations, and dividing the work amongst the available CPUs. Data-Parallel Haskell will let us take advantage of GPUs and many-core machines for large-scale data-parallelism in the future. Right now, DPH support in GHC is experimental, but work on it continues.

That’s not to say that concurrency doesn’t have its place. So when should you use concurrency? Concurrency is most useful as a method for structuring a program that needs to communicate with multiple external clients simultaneously, or respond to multiple asynchronous inputs. It’s perfect for a GUI that needs to respond to user input while talking to a database and updating the display at the same time, for a network application that talks to multiple clients simultaneously, or a program that communicates with multiple hardware devices, for example. Concurrency lets you structure the program as if each individual communication is a sequential task, or a thread, and in these kinds of settings it’s often the ideal abstraction. STM is vitally important for making this kind of programming more tractable.

As luck would have it, we can run concurrent programs in parallel without changing their semantics. However, concurrent programs are often not compute-bound, so there’s not a great deal to be gained by actually running them in parallel, except perhaps for lower latency.

Having said all this, there is some overlap between concurrency and parallelism. Some algorithms use multiple threads for parallelism deliberately; for example, search-type problems in which multiple threads search branches of a problem space, where knowledge gained in one branch may be exploited in other concurrent searches. SAT-solvers and game-playing algorithms are good examples. An open problem is how to incorporate this kind of non-deterministic parallelism in a safe way: in Haskell these algorithms would end up in the IO monad, despite the fact that the result could be deterministic. Still, I believe these kinds of problems are in the minority, and we can get a long way with purely deterministic parallelism.

You’ll be glad to know that with GHC you can freely mix parallelism and concurrency on multicore CPUs to your heart’s content. Knock yourself out 🙂

Heads up: what you need to know about Unicode I/O in GHC 6.12.1

The GHC 6.12.1 release candidate will be out shortly, and it includes a newly rewritten I/O library including Unicode support. Here’s what you need to know to make sure your applications/libraries continue to work with GHC 6.12.1.

We expect the release candidate phase to last a couple of weeks or so, depending on how many problems arise, after which 6.12.1 will be released. However, 6.12 is not currently scheduled to become part of the Haskell Platform until the next platform release, due around February 2010, so package authors have a grace period for testing before 6.12.1 becomes more widely used.

The new System.IO docs can be found here, in particular the unicode-related functionality is here.

Console and text I/O

If you are reading or writing to/from the console, or reading/writing text files in the local encoding, then use the System.IO functions for doing text I/O (openFile, readFile, hGetContents, putStr, etc.), and you will automatically benefit from the new Unicode support. Text written will be encoded according to the current locale, or code page on Windows, and text read will be decoded accordingly.

If you need to use a particular encoding (e.g. UTF-8), then the hSetEncoding function lets you set the encoding on a Handle, e.g.

hSetEncoding stdout utf8

Binary I/O

If you’re reading or writing binary data, or for some other reason you want to bypass the Unicode encoding/decoding that the IO library now does, you have two options:

- Use openBinaryFile or hSetBinaryMode to put the Handle into binary mode. No encoding/decoding or newline translation will be done.

- Use hGetBuf/hPutBuf, or the I/O operations provided by Data.ByteString, which all operate with binary data.

Using utf8-string

If you’re using utf8-string in certain ways then you might get incorrect results.

- The operations in System.IO.UTF8 add a UTF8 wrapper around the corresponding System.IO operation. Unless the underlying Handle is in binary mode, these operations will result in garbage being read or written. For example, if you want to use System.IO.UTF8.print, then call hSetBinaryMode stdout True first. Better still, just use System.IO.print directly. f you need to fix the encoding to UTF-8 rather than using the locale encoding, then call hSetEncoding handle utf8.

- The rest of the operations in utf8-string will continue to work as before.

Newline handling

There is a new API for newline translation in System.IO. By default, Handles in text mode translate newlines to or from the native representation for the current platform, that is “\r\n” on Windows and “\n” on other platforms. You can change this default using hSetNewlineMode, for example to be able to read a file with either Windows or Unix line-ending conventions:

hSetNewlineMode handle universalNewlineMode

where universalNewlineMode translates from “\r\n” to “\n” on input, leaving “\n” alone, and translates “\n” to the native newline representation on output.

GHC Status Update (from the Haskell Implementors Workshop)

The video of Simon Peyton Jones’ GHC Status Update presentation at the Haskell Implementors Workshop is now online. Lots of details about the goodies that will shortly be arriving in GHC 6.12.1.

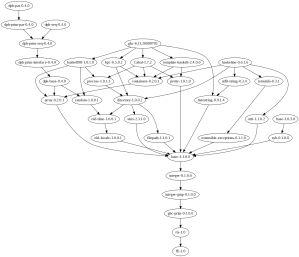

Visualising the Haskell package dependency graph

This is a graph showing the dependencies between the packages that come with GHC. I just added some (trivial) support to the ghc-pkg tool to generate the output in dot format, and generated the above graph with

ghc-pkg dot | tred | dot -Tsvg >pkgs.svg

Note the “tred” filter, which eliminates clutter from transitive edges. The ‘ghc-pkg dot’ command should be in GHC 6.12.1.

New paper: Parallel Performance Tuning for Haskell

Here’s our Haskell Symposium paper about parallel profiling with GHC and ThreadScope:

Parallel Performance Tuning for Haskell (Don Jones Jr., Simon Marlow, Satnam Singh) Haskell ’09: Proceedings of the second ACM SIGPLAN symposium on Haskell, Edinburgh, Scotland, ACM, 2009

Abstract:

Parallel Haskell programming has entered the mainstream with support now included in GHC for multiple parallel programming models, along with multicore execution support in the runtime. However, tuning programs for parallelism is still something of a black art. Without much in the way of feedback provided by the runtime system, it is a matter of trial and error combined with experience to achieve good parallel speedups.

This paper describes an early prototype of a parallel profiling system for multicore programming with GHC. The system comprises three parts: fast event tracing in the runtime, a Haskell library for reading the resulting trace files, and a number of tools built on this library for presenting the information to the programmer. We focus on one tool in particular, a graphical timeline browser called ThreadScope.

The paper illustrates the use of ThreadScope through a number of case studies, and describes some useful methodologies for parallelizing Haskell programs.

The new GHC build system is here!

The new GHC build system has been now been merged in. GHC developers can look forward to increases in productivity and faster build times thanks to the new non-recursive make design.

Here are some quick stats:

Lines of build-system code (including Makefile and Haskell code):

- old build system: 7793

- new build system: 5766 (about 2000 fewer lines, or a 26% reduction)

Furthermore, this doesn’t count the code for ‘cabal make’, which is still in Cabal but is no longer used by GHC.

Time to validate with -j2 (the default; test suite is still single-threaded):

- old: 28 mins

- new: 28 mins

Single and dual-core builds don’t see much difference. However, adding more cores starts to demonstrate the improved parallelism: validate with -j4 (still single-threaded test suite):

- old: 25.3 mins

- new: 24.0 mins

Parallelism in the new build system is a lot better. It can build libraries in parallel with each other, profiled libraries in parallel with non-profiled libraries, and even libraries in parallel with stage 2. There’s very little explicit ordering in the new build system, we only tell make about dependencies.

Time to do ‘make’ when the tree is fully up-to-date:

- old: 2m 41s

- new: 4.1s

Time to do ‘make distclen’:

- old: 5.7s

- new: 1.0s

We also have all-new build-system documentation.

The biggest change you’ll notice is that the build system now expresses all the dependencies, so whatever you change, you should be able to say ‘make’ to bring everything up to date. Sometimes this can result in more rebuilding than you were expecting, or more than is strictly necessary, but it should save time in the long run as we run

into fewer problems caused by things being inconsistent or out-of-date in the build.

We stretched GNU make to its limits. On the whole it performed pretty well: even for a build of this size, the time and memory consumed by make itself is negligible. The most annoying problem we encountered was the need to split the build into phases to work around GNU make’s lack of support for dependencies between included makefiles.

On the whole I’m now convinced that non-recursive make is not only useful but practical, provided you stick to some clear idioms in your build-system design.

New paper: Runtime Support for Multicore Haskell

Here’s a paper on the internals of GHC’s parallelism support, showing some nice improvements in parallel performance over GHC 6.10.1: “Runtime Support for Multicore Haskell“. Abstract:

Purely functional programs should run well on parallel hardware because of the absence of side effects, but it has proved hard to realise this potential in practice. Plenty of papers describe promising ideas, but vastly fewer describe real implementations with good wall-clock performance. We describe just such an implementation, and quantitatively explore some of the complex design tradeoffs that make such implementations hard to build. Our measurements are necessarily detailed and specific, but

they are reproducible, and we believe that they offer some general insights.